How not to use generative AI

July 13th, 2024 at 9:06 am (Finances, Technology)

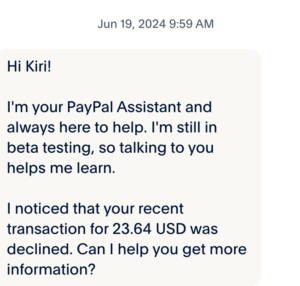

The other day, I couldn’t find some information I needed on the PayPal site, so I engaged with their generative AI chatbot. Before I could type anything, it launched in with this comment:

Hi Kiri!

I’m your PayPal Assistant and always here to help. I’m still in beta testing, so talking to you helps me learn.

I noticed that your recent transaction for 23.64 USD was declined. Can I help you get more information?

I replied “yes” and it gave me a generic link to reasons why a transaction could be declined. It refused to give me any information about the transaction it referred to.

I couldn’t find any such transaction in my account history. I therefore had to call a human on their customer service line to ask. Sure enough, they confirmed there was no such transaction. The chatbot simply made it up.

If I ran PayPal, I’d be terribly embarrassed – no one needs a financial service that generates red herrings like this – and I would turn the thing off until I could test and fix it. Given that this happened to me before I typed anything to the chatbot, you can bet it’s happening to others. If they were hoping the chatbot would save them on human salaries, all it did was create extra work for me and their customer service representative, who could have been helping solve a real problem, not one fabricated by their own chatbot.

I asked if there was somewhere to send the screenshot so they could troubleshoot it. I was told to email it to service@paypal.com. I got an auto-reply that said “Thanks for contacting PayPal. We’re sorry to inform you that this email address is no longer active.” Instead, it directed me to their help pages and to click “Message Us” which… you guessed it… opens a new dialog with the same chatbot.

This careless use of generative AI technology is a growing problem everywhere. A generative AI system is designed to _generate_ (i.e., make up) things. It employs randomness and abstraction to avoid simple regurgitation. That makes it great for writing poetry or brainstorming, but it also means it is not (on its own) capable of looking up facts. It is quite clearly not the tool to use to describe, manage, or address financial services. Would you use a roulette wheel to balance your checkbook?
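To make the "looking up facts" point concrete, here is a minimal sketch of what a grounded greeting could look like. I have no idea how PayPal's system is actually built, so everything here is hypothetical: the `Transaction` record, the `latest_declined` helper, and the `opening_message` function are names I made up for illustration. The idea is simply that any claim about a declined transaction comes from a lookup in the account history, and if the lookup finds nothing, the message says nothing about it.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class Transaction:
    """Hypothetical account-history record (not PayPal's real schema)."""
    amount: Decimal
    currency: str
    status: str  # assumed values: "completed" or "declined"


def latest_declined(history: list[Transaction]) -> Optional[Transaction]:
    """Return the most recent declined transaction, or None.

    Assumes `history` is ordered oldest first.
    """
    for tx in reversed(history):
        if tx.status == "declined":
            return tx
    return None


def opening_message(name: str, history: list[Transaction]) -> str:
    """Build the greeting from looked-up facts only."""
    tx = latest_declined(history)
    if tx is None:
        # Nothing in the records, so make no claim about a declined payment.
        return f"Hi {name}! How can I help you today?"
    return (
        f"Hi {name}! I noticed that your recent transaction for "
        f"{tx.amount} {tx.currency} was declined. "
        "Can I help you get more information?"
    )


if __name__ == "__main__":
    # An account with no declined transactions gets a plain greeting.
    print(opening_message("Kiri", [Transaction(Decimal("12.00"), "USD", "completed")]))
```

A generative model could still be used to phrase the reply, but in this sketch the amount and the status are copied from the record rather than generated.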

PayPal is exhibiting several problems here, all of which are correctable:

1. Lack of knowledge about AI technology strengths and limitations

2. Decision to deploy the AI technology despite not understanding it

3. Lack of testing of their AI product

4. No mechanism to receive reports of errors, limiting the ability to detect and correct problems
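As one illustration of what testing (problem 3) might involve, here is a rough sketch of an automated check that could run on a drafted chatbot message before it is shown to a customer. It is an assumption on my part, not a description of anything PayPal does: the `unsupported_amounts` function and the "amount followed by a three-letter currency code" pattern are hypothetical, and a real check would need to cover far more than amounts.

```python
import re
from decimal import Decimal

# Assumed message format: an amount with two decimals followed by a
# three-letter currency code, e.g. "23.64 USD".
AMOUNT_PATTERN = re.compile(r"(\d+\.\d{2}) ([A-Z]{3})")


def unsupported_amounts(
    message: str, known: set[tuple[Decimal, str]]
) -> list[tuple[Decimal, str]]:
    """Return every (amount, currency) mentioned in `message` that does not
    appear in `known`, the set of amounts found in the account records."""
    mentioned = [
        (Decimal(amount), currency)
        for amount, currency in AMOUNT_PATTERN.findall(message)
    ]
    return [item for item in mentioned if item not in known]


if __name__ == "__main__":
    draft = "I noticed that your recent transaction for 23.64 USD was declined."
    # With no matching record, the draft is flagged instead of being sent.
    print(unsupported_amounts(draft, known=set()))
```

Any non-empty result means the message refers to something the records do not support, so it could be blocked and logged for a human to review, which would also give the company an error report it does not depend on customers to send.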

I hope to see future improvement. For now, this is a good cautionary tale for everyone rushing to integrate AI everywhere.

Jim said,

August 12, 2024 at 8:04 pm

That initial interaction would be very useful if it were at all true (and if the resulting link were something other than a generic page, a sin I see all too often).

I get the feeling that a lot of businesses are in the “throw AI up on the wall and see what sticks” phase, where the result is the four correctable problems you cite.