Reassess This

March 25th, 2009 at 10:46 pm (Finances, Society)

As a homeowner, I’m used to getting all sorts of shady offers in the mail for new mortgages with astoundingly bad terms. But now that home values are declining, the free market has spawned a new kind of scam, at least in California. In our fair state, homes are reassessed only when they are sold (hoo boy, Prop 13!). In the meantime, the County Assessor assumes that your home value increases by about 2% each year and increases your property taxes accordingly. Historically, this has been a win for homeowners, whose property values were outpacing 2% by leaps and bounds, and an increasingly problematic loss for any local tax-supported services (such as school funding).
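To see how the assessed value can end up above the market value once prices fall, here is a rough sketch in Python. Every number in it is made up for illustration: the purchase price, the yearly market changes, and the 1% tax rate are my assumptions, not figures from the county, and the function names are hypothetical.

```python
def assessed_value(purchase_price, years, annual_increase=0.02):
    """Assessed value after compounding the assumed ~2% increase each year."""
    return purchase_price * (1 + annual_increase) ** years


def market_value(purchase_price, yearly_changes):
    """Market value after applying each year's fractional change in turn."""
    value = purchase_price
    for change in yearly_changes:
        value *= 1 + change
    return value


# Hypothetical example: two good years followed by two bad ones.
purchase_price = 500_000
yearly_changes = [0.05, 0.05, -0.10, -0.15]
assumed_tax_rate = 0.01  # assumption, for illustration only

assessed = assessed_value(purchase_price, len(yearly_changes))
market = market_value(purchase_price, yearly_changes)

# How much more the county thinks the home is worth than the market does.
over_assessment = max(0.0, assessed - market)

print(f"Assessed value:  ${assessed:,.2f}")
print(f"Market value:    ${market:,.2f}")
print(f"Over-assessment: ${over_assessment:,.2f}")
print(f"Extra tax/year:  ${over_assessment * assumed_tax_rate:,.2f}")
```

When the over-assessment comes out positive, that gap is what a reassessment request would be trying to close.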

Anyway, these new offers take the form of a letter warning you that your home is probably worth less than the county thinks it is, and giving you the opportunity to pay a third party company to file a “tax reassessment” form to have the property properly revalued (and get a lower property tax bill). What makes this such a miserable scam is that anyone can file this form themselves, for free. Here are online instructions, with the online form. Not only that, but the County Assessor is pre-emptively re-assessing 500,000 homes this year (sold between 2003 and 2008) to see if they should be adjusted — you don’t even have to file the form! The County Assessor’s office is clearly exasperated with this scam, too, and has posted a scam warning on the subject.

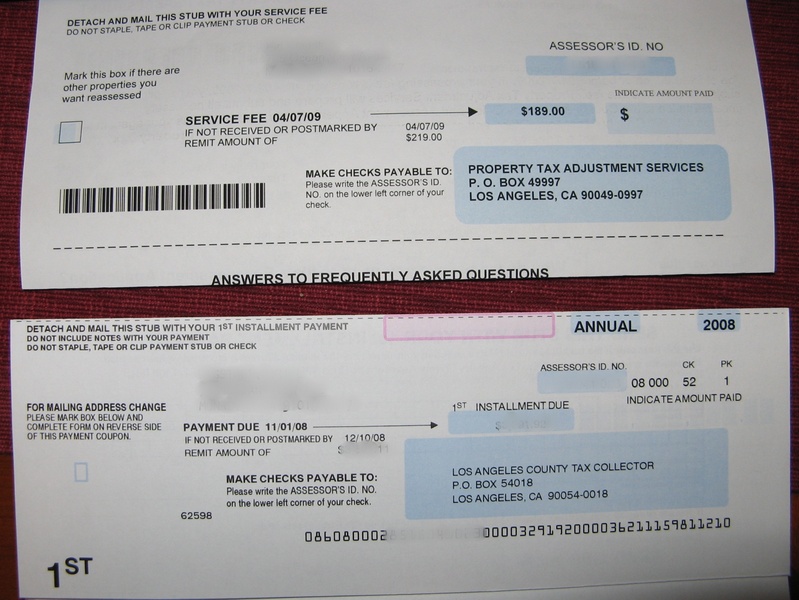

Recently, I received one of these offers that really took the cake. Not only did the letter from “Property Tax Adjustment Services” try to entice me to pay for a free service, but it actually came formatted as a bill, complete with a “due date” and a “late charge” if payment was not received by the deadline! As I stared at the “bill”, it seemed strangely familiar… so familiar that I went and dug up my actual property tax bill. They are formatted virtually identically. See the image above. The “reassessment bill” is on top, and my property tax bill is on the bottom (actual numbers removed). Obviously they’re hoping that I, as a busy homeowner, might glance at this, think it comes from the County Assessor’s office, and assume it is a required payment.

This scam letter actually does mention the fact that you can file the form yourself (but not that it’s free to do so). It also claims that “Property Tax Adjustment Services” is an expert business that will ensure that it gets done right. Yeah. The form requires all of three pieces of information: your home’s address and the addresses of two comparable recent sales. This information is easily available from the County Assessor’s website, which even has a browsable map interface so you can see all recent sales near your home.

Disgusting, is what it is. Or simple capitalism in action? Caveat emptor!

As I opened up the containers, I wondered how exactly you could, in fact, reduce the fat in peanut butter. Although commercial peanut butter does have added oils “to prevent separation”, most of the fat actually comes from the peanuts themselves. How do you get a low-fat peanut? Answer: you don’t! While of course I don’t have the recipe that Skippy uses, perusing the ingredient lists of the two products suggests that you reduce the fat by… diluting the peanuts. The same non-separation oils are used, but reduced-fat peanut butter also comes with “soy protein” and “corn syrup solids” not present in the regular variety. The total protein per serving is the same in both products, so I can only imagine that the soy protein is there to make up the balance after diluting the peanuts (and their protein). The corn syrup solids are apparently there to make the product sweeter — and in fact the nutrition label reports more sugar (4g vs. 3g) in
